Distributed multi-agent target search and tracking with Gaussian process and reinforcement learning

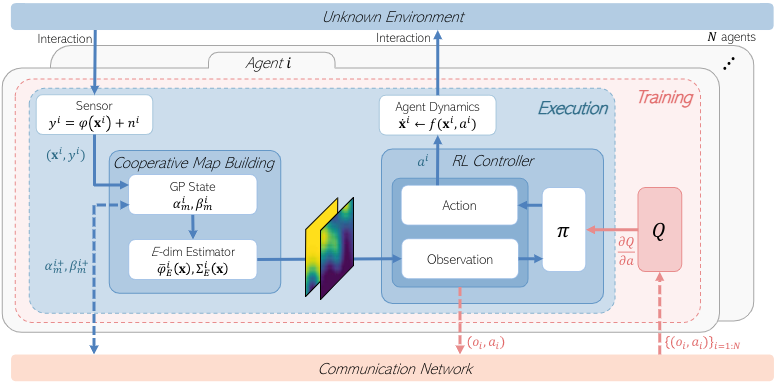

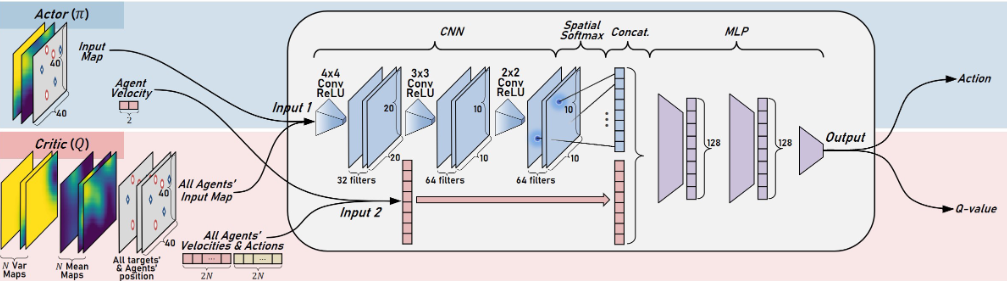

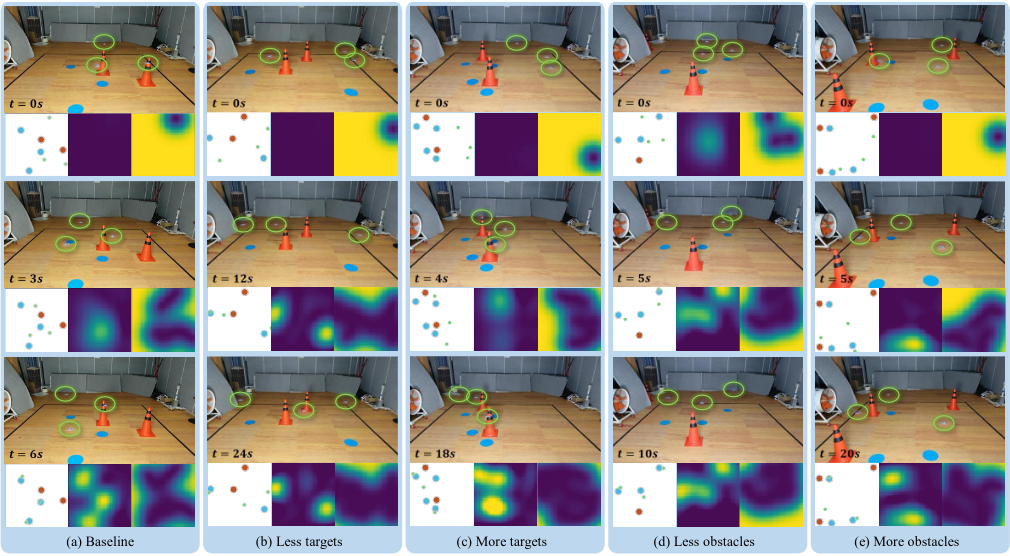

Abstract: Deploying multiple robots into unknown environments has many practical benefits, yet planning over the unknown remains a difficult challenge. With recent advances in deep learning, intelligent control techniques such as reinforcement learning have enabled agents to learn autonomously from environment interactions with little to no prior knowledge. Such methods can address the exploration-exploitation tradeoff of planning over the unknown in a data-driven manner, eliminating the reliance on heuristics typical of traditional approaches and streamlining the decision-making pipeline through end-to-end training. In this paper, we propose a multi-agent reinforcement learning agent with cooperative map building based on a distributed Gaussian process to tackle the partial observability of multi-agent target search and tracking missions. The agent leverages the distributed Gaussian process, which encodes a belief over the target locations, to plan efficiently over the unknown. We evaluate the performance and transferability of the trained policy in simulation and demonstrate the method on a swarm of micro unmanned aerial vehicles in hardware experiments.
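The abstract does not specify how the distributed Gaussian process is constructed or how agents merge their maps. Purely as an illustration under stated assumptions, the sketch below fits an independent GP per agent over a discretized search area and combines the per-agent beliefs cell by cell with a naive product-of-experts rule. That fusion rule is a common choice in the distributed GP literature and is swapped in here as an assumption, not taken from the paper. The squared-exponential kernel, its hyperparameters (`length_scale`, `signal_var`, `noise_var`), and every function name are hypothetical.

```python
import math


def rbf(a, b, length_scale=1.0, signal_var=1.0):
    """Squared-exponential kernel between two 2-D points (assumed kernel)."""
    d2 = (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
    return signal_var * math.exp(-0.5 * d2 / length_scale ** 2)


def cholesky(K):
    """Return lower-triangular L with K = L L^T."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(K[i][i] - s)
            else:
                L[i][j] = (K[i][j] - s) / L[j][j]
    return L


def forward_sub(L, b):
    """Solve L x = b for lower-triangular L."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def back_sub(L, b):
    """Solve L^T x = b for lower-triangular L."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_posterior(X, y, queries, noise_var=0.1):
    """Zero-mean GP posterior (mean, variance) at each query point.

    X: one agent's observation locations; y: its target-evidence readings.
    """
    K = [[rbf(a, b) + (noise_var if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = back_sub(L, forward_sub(L, y))  # alpha = K^{-1} y
    out = []
    for q in queries:
        k_star = [rbf(q, x) for x in X]
        mean = sum(k * a for k, a in zip(k_star, alpha))
        v = forward_sub(L, k_star)
        var = rbf(q, q) - sum(vi * vi for vi in v)
        out.append((mean, max(var, 1e-12)))  # clamp tiny negative round-off
    return out


def fuse_product_of_experts(local_beliefs):
    """Naive PoE: precision-weighted fusion of per-agent (mean, var) for one cell."""
    precision = sum(1.0 / var for _, var in local_beliefs)
    mean = sum(m / var for m, var in local_beliefs) / precision
    return mean, 1.0 / precision


if __name__ == "__main__":
    grid = [(i, j) for i in range(5) for j in range(5)]
    agent_a = gp_posterior([(1.0, 1.0)], [1.0], grid)  # A sensed the target near (1, 1)
    agent_b = gp_posterior([(3.0, 3.0)], [0.0], grid)  # B sensed nothing near (3, 3)
    fused = [fuse_product_of_experts([a, b]) for a, b in zip(agent_a, agent_b)]
    best = max(range(len(grid)), key=lambda i: fused[i][0])
    print(grid[best])  # → (1, 1)
```

The fused mean and variance maps are the kind of belief a learned policy could take as part of its observation, with low-variance cells marking explored regions. Note that this naive fusion counts the shared prior once per agent, which is one reason variants such as the generalized product of experts or the robust Bayesian committee machine are often preferred.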